Introduction

Even though Microsoft has started to neglect WPF, the popularity of WPF for creating Windows applications is unbroken. This is natural if we consider that the framework still offers a lot of advantages. Additionally, WPF gives us a rich coding model that is still unmatched.

However, one of the disadvantages of WPF is its dependency on the underlying framework. This is nothing new, since every technology has this kind of disadvantage. If we program web applications, we depend on the browser vendors. If we program a microcontroller, we depend on its manufacturer.

In this article I will discuss the possibilities and problems that one faces when using multi-touch with WPF. The article will also illustrate various solutions. It should be said that the problem described in this article might not be a problem for you or your project at all; for me personally, however, it has been quite a troublemaker.

Background

About a year ago I finished my first touch-focused application with WPF, called Sumerics. The application had a nice-looking UI and worked smoothly. However, when I used multi-touch to pan or zoom the charts, I encountered a strange behavior: panning and zooming worked fine in the beginning, but then became more and more delayed. I thought that the chart control I was using (the amazing OxyPlot library) had a bug, but I was not able to find it. From my perspective the code looked fine and was in some places already optimized.

For a while I could just ignore this weird behavior, but in my latest project, Quantum Striker, I encountered it again. I have been responsible for a game engine, audio and video, as well as a proper story line. The controller integration is part of this game engine, hence touch input has been integrated via WPF. What I experienced is that using the so-called strikers, which basically just represent two (permanent) touch points, leads to a delay in the touch execution. This delay is practically zero at the beginning, but increases with the duration of the touch interaction.

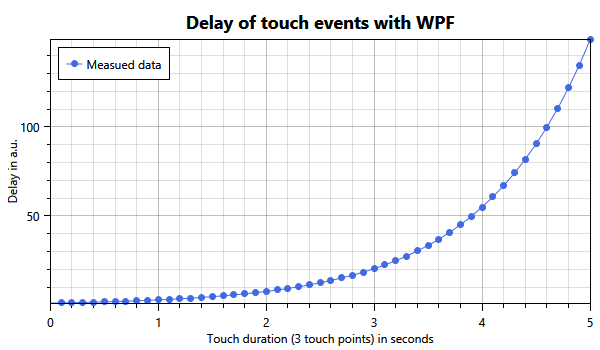

While hunting down this annoying bug I found out that it is actually WPF that is not working properly. I created a small demonstration program that showcases this behavior. If we use the application with just one finger, we will not notice any strong delays. Using the same application with more fingers, we will see that the delay grows with the duration of the touch interaction. Additionally, the number of fingers, i.e. the number of simultaneous touch interactions, has an impact on the slope of this curve.

Approaching multi-touch

WPF offers a variety of possibilities to hook on to the events of touch devices. All of those possibilities are event based, i.e. WPF pushes the data to us. There is no option to access a pull-based (polling) API.

In general we are able to access the following events:

- Touch*, like TouchDown or TouchUp
- Stylus*, like StylusDown or StylusUp
- Manipulation*, like ManipulationStarted or ManipulationCompleted

As with other WPF input events, we also have access to preview events, i.e. events that are raised in the tunnelling phase, before the bubbling phase. (In DOM terminology this would be the capture phase.) During tunnelling the event travels from the root of the tree down through the intermediate elements until it reaches the element where the input originated, called the source.

Here we have the following Preview* events:

- PreviewTouch*, like PreviewTouchDown or PreviewTouchUp
- PreviewStylus*, like PreviewStylusDown or PreviewStylusUp

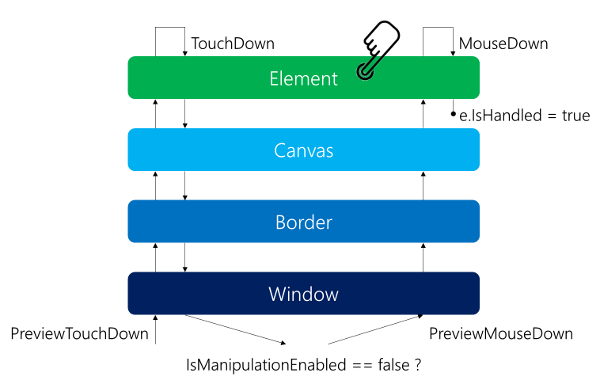

When we touch the screen, WPF receives the corresponding messages and generates these events. Since touch events are routed events, the Preview* event is raised first on the root of the visual tree and travels down the tree until it reaches the source element on which the touch occurred.

Once the source element is reached, the regular event is raised and starts bubbling from the source element up the visual tree to the root. The propagation can be stopped by setting e.Handled = true.

On the other hand, if the touch event reaches the root without being handled, it is promoted to a mouse event in order to guarantee backwards compatibility. At this point, PreviewMouseDown is raised for the tunnelling phase. Finally, the MouseDown event is raised in the bubbling phase.
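The routing order described above can be illustrated with a deliberately simplified model. The sketch below is plain C# and not WPF's implementation; RoutingModel and its string log are hypothetical, and the promotion rule is reduced to "promote to mouse input if nobody set Handled".

```csharp
#nullable enable
using System.Collections.Generic;

// Simplified model of how a routed touch-down travels through the tree.
// NOT WPF's implementation; it only illustrates the order of the phases.
public static class RoutingModel
{
    // path: element names from the root of the visual tree down to the source.
    // handledBy: element whose handler sets e.Handled = true, or null for none.
    public static List<string> Route(IReadOnlyList<string> path, string? handledBy)
    {
        var log = new List<string>();
        bool handled = false;

        // Tunnelling phase: PreviewTouchDown from the root down to the source.
        for (int i = 0; i < path.Count && !handled; i++)
        {
            log.Add("PreviewTouchDown@" + path[i]);
            handled = path[i] == handledBy;
        }

        // Bubbling phase: TouchDown from the source back up to the root.
        for (int i = path.Count - 1; i >= 0 && !handled; i--)
        {
            log.Add("TouchDown@" + path[i]);
            handled = path[i] == handledBy;
        }

        // Unhandled touch input is promoted to mouse input: tunnel, then bubble.
        if (!handled)
        {
            for (int i = 0; i < path.Count; i++) log.Add("PreviewMouseDown@" + path[i]);
            for (int i = path.Count - 1; i >= 0; i--) log.Add("MouseDown@" + path[i]);
        }
        return log;
    }
}
```

With the path Window, Grid, Canvas and no handler, the model visits every element four times (two touch passes, two mouse passes), which hints at why a deep tree makes every single touch sample costly.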

If we do not have a control or a window nearby, we can also access the touch input by using the static class Touch, which can be found in the System.Windows.Input namespace. This type is available by referencing the PresentationCore library.

However, this approach also only lets us hook on to an event, called FrameReported. Our callback then receives its data as a TouchFrameEventArgs instance. The most important method of this class is GetTouchPoints, which gives us access to the currently available touch points. Each touch point is assigned to a touch device, and every touch device has an integer Id. This makes it possible to track individual fingers as long as they stay on or near the surface.

The TouchPoint class contains properties like Action (the last action, i.e. what happened with this touch device), Position (the position of this point, relative to the element passed to GetTouchPoints) and TouchDevice (useful information on this touch device, like IsActive or Id). This is all we need to get started with multi-touch in WPF.
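To show how the device Id enables finger tracking, here is a minimal sketch of the bookkeeping one might do with the points returned by GetTouchPoints. TouchTracker and TouchActionKind are hypothetical names; the enum only mirrors WPF's TouchAction so that the sketch compiles without WPF, and the FrameReported wiring appears in a comment only.

```csharp
using System.Collections.Generic;

// Mirrors System.Windows.Input.TouchAction (Down, Move, Up) so the sketch
// compiles without WPF. In a WPF project TouchPoint.Action would be used.
public enum TouchActionKind { Down, Move, Up }

// Hypothetical helper: keeps the last known position of every active finger,
// keyed by the integer Id of its touch device.
public sealed class TouchTracker
{
    private readonly Dictionary<int, (double X, double Y)> active = new();

    public IReadOnlyDictionary<int, (double X, double Y)> Active => active;

    public void Update(int deviceId, TouchActionKind action, double x, double y)
    {
        switch (action)
        {
            case TouchActionKind.Down:
            case TouchActionKind.Move:
                active[deviceId] = (x, y);   // add or overwrite this finger's position
                break;
            case TouchActionKind.Up:
                active.Remove(deviceId);     // the finger left the surface
                break;
        }
    }
}

// Possible wiring in WPF (not compiled here):
// Touch.FrameReported += (s, e) =>
// {
//     foreach (TouchPoint p in e.GetTouchPoints(canvas1))
//         tracker.Update(p.TouchDevice.Id, /* map p.Action */, p.Position.X, p.Position.Y);
// };
```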

The problem

Let's consider the simple problem of using two fingers to zoom in or out on a 3D plot. The WPF logical tree consists of several hundreds of objects, and the visual tree contains even more elements. WPF will now walk through the tree and evaluate the touch at each element. This is a massive overhead, and it happens even though no event handler is actually attached. While mouse events fire instantly, touch is handled differently.

It seems that touch events are not correctly discarded when the UI is busy. Touch input is generally heavier than mouse input, since it generates more points and each point carries a bounding box that represents the contact area. If the UI is busy, WPF shouldn't try to process the events by going down and up the tree, but instead buffer the points and store them in the collection of intermediate points.

This means that the main problem of WPF with touch lies in the frequency of touch events, combined with the UI workload. If the UI were less busy or the frequency were reduced, WPF could probably handle the events nicely.
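The buffering idea can be sketched outside of WPF: samples are only stored, and the UI consumes the latest point per finger once per frame, so the UI work no longer scales with the raw event frequency. TouchCoalescer is a hypothetical class, not something WPF offers; in a WPF application Drain could, for instance, be called from the CompositionTarget.Rendering event.

```csharp
using System.Collections.Generic;

// Hypothetical sketch: instead of handling every raw touch sample, collect the
// samples and let the UI consume only the most recent point per finger.
public sealed class TouchCoalescer
{
    private readonly object gate = new();
    private readonly Dictionary<int, (double X, double Y)> latest = new();
    private int received;

    // Called for every raw sample (possibly from a non-UI thread).
    public void Add(int id, double x, double y)
    {
        lock (gate)
        {
            latest[id] = (x, y);   // newer samples overwrite older ones
            received++;
        }
    }

    // Called once per frame on the UI thread; returns the coalesced points and
    // the number of raw samples they replace, then starts a new batch.
    public (Dictionary<int, (double X, double Y)> Points, int Raw) Drain()
    {
        lock (gate)
        {
            var snapshot = new Dictionary<int, (double X, double Y)>(latest);
            int raw = received;
            latest.Clear();
            received = 0;
            return (snapshot, raw);
        }
    }
}
```

Fingers that did not move since the last frame simply do not appear in the next batch, which is fine for drawing or zooming, where only changes matter.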

It is also possible to use different kinds of events. In some scenarios the Stylus* events seem to perform better, but not in all of them, and there is no clear pattern that tells us when they are the better, and thus recommended, choice.

Obviously it could be quite beneficial to outsource the touch input to a different layer, which does not result in any event tunnelling or bubbling at all. Additionally, we might want to use special touch features of the Windows API directly.

The delay of these events is shown in the next plot. Here we just marked the touch events with a timestamp and logged them in the debug log. Since each turn adds a roughly constant offset, the delay is a cumulative sum and keeps growing with the duration of the interaction. The time delay is shown in arbitrary units and might vary from system to system. However, the thing to note here is that the problem gets worse with time. It might saturate at some point, but by then the application will certainly be unusable.
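The growing delay can be reproduced with a toy queueing model. This is an assumption for illustration, not the measurement behind the plot: every finger reports a sample every intervalMs, the samples of all fingers are evenly interleaved, and the UI thread needs costMs per sample. Whenever costMs exceeds the gap between two samples, each sample adds (costMs - gap) to the backlog, so the delay grows linearly, and more fingers shrink the gap and steepen the slope.

```csharp
using System;
using System.Collections.Generic;

// Toy single-queue model of the UI thread (assumption, not a measurement).
public static class DelayModel
{
    public static List<double> Delays(int fingers, double intervalMs, double costMs, int samples)
    {
        var delays = new List<double>();
        double gap = intervalMs / fingers;  // time between two arriving samples
        double busyUntil = 0;               // when the UI thread becomes free
        for (int n = 0; n < samples; n++)
        {
            double arrival = n * gap;
            double start = Math.Max(arrival, busyUntil);  // wait while UI is busy
            busyUntil = start + costMs;
            delays.Add(busyUntil - arrival);              // arrival until handled
        }
        return delays;
    }
}
```

For example, with intervalMs = 10 and costMs = 8, one finger yields a constant delay of 8 per sample, while two fingers yield 8, 11, 14, 17 and so on.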

In the next section we will look at possible solutions, with their pros and cons. Finally, the solution used in my recent project is presented, together with a short discussion and possible improvements.

Possible solutions

There is a variety of possible solutions, and some might be more appropriate than others depending on the problem. If the interaction with the screen is multi-touch but limited to just a few seconds, the Stylus* events might be sufficient.

If we want to use the stylus events, e.g. for drawing on a Canvas element, the following code does the job.

```csharp
// Members of the window; canvas1 is the Canvas from the XAML.
// Requires: System.Windows, System.Windows.Input, System.Windows.Media, System.Windows.Shapes
Point pt1;
Point pt2;
// -1 means "no first stylus recorded yet" (an Int32 field would default to 0).
Int32 firstId = -1;

void StylusDown(Object sender, StylusEventArgs e)
{
    if (canvas1 != null)
    {
        var id = e.StylusDevice.Id;
        // Clear all lines
        canvas1.Children.Clear();
        // Capture the stylus device (i.e. the finger on the screen)
        e.StylusDevice.Capture(canvas1);
        // Record the ID of the first stylus point if it hasn't been recorded.
        if (firstId == -1)
            firstId = id;
    }
}

void StylusMove(Object sender, StylusEventArgs e)
{
    if (canvas1 != null)
    {
        var id = e.StylusDevice.Id;
        var tp = e.GetPosition(canvas1);

        if (id == firstId)
        {
            // This is the first stylus point; just record its position.
            pt1 = tp;
        }
        else
        {
            // Any other stylus point: record it and draw a line to the first one.
            pt2 = tp;
            canvas1.Children.Add(new Line
            {
                Stroke = new RadialGradientBrush(Colors.White, Colors.Black),
                X1 = pt1.X,
                X2 = pt2.X,
                Y1 = pt1.Y,
                Y2 = pt2.Y,
                StrokeThickness = 2
            });
        }
    }
}

void StylusUp(Object sender, StylusEventArgs e)
{
    var device = e.StylusDevice;

    if (canvas1 != null && device.Captured == canvas1)
        canvas1.ReleaseStylusCapture();

    // Forget the first stylus once it is lifted, so the next touch can take its place.
    if (device.Id == firstId)
        firstId = -1;
}
```

In my experience, the Manipulation* and the general Touch* events are usually only good for single touches. Additionally, they seem to become quite laggy after a short while. The advantage of the stylus events lies in their special role in WPF: since stylus interactions are point-wise and long-lasting, they are more lightweight and less laggy than the other possibilities.

Of course the solution that will finally be presented here is a little bit different. First we are going to assume that we require a solution that is long-lasting, reliable and still possible with WPF. Second we also want this solution to support as many fingers as possible (from the hardware side). Finally the solution should still allow other interactions, like keyboard or mouse events, to be used in our project if demanded.

The solution is to use a layer, created from a Windows Forms Form instance, that sits above the critical region. This layer needs several properties. First, it has to be transparent. Second, Windows must still regard it as an event target. Third, it should have the same size as our drawing canvas (or whatever element). And finally, it should always stay above our drawing canvas, no matter which actions have been taken before (minimizing, moving, ...). This consistency is quite important.

This approach is necessary because, for managed .NET applications, only Windows Forms gives us full access to the message loop. Also, a Form instance can be made transparent and placed on top of other windows.
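The third requirement (same size as the canvas) involves a unit conversion that is easy to get wrong. The article does not show how its layer tracks the element, so the following is only a sketch under assumptions: the process is DPI aware (WPF applications are system DPI aware by default), the element's top-left corner comes from PointToScreen (already in device pixels), and its size comes from ActualWidth/ActualHeight in device-independent units of 1/96 inch. OverlayBounds is a hypothetical helper name.

```csharp
using System;

// Hypothetical helper: converts a WPF element's placement into the
// device-pixel rectangle a Windows Forms overlay would need.
public static class OverlayBounds
{
    // screenX/screenY: top-left corner from PointToScreen (device pixels).
    // widthDip/heightDip: ActualWidth/ActualHeight (1/96 inch units).
    // dpiX/dpiY: monitor DPI, where 96 corresponds to 100 % scaling.
    public static (int X, int Y, int Width, int Height) Compute(
        double screenX, double screenY,
        double widthDip, double heightDip,
        double dpiX, double dpiY)
    {
        int width = (int)Math.Round(widthDip * dpiX / 96.0);
        int height = (int)Math.Round(heightDip * dpiY / 96.0);
        return ((int)Math.Round(screenX), (int)Math.Round(screenY), width, height);
    }
}
```

Recomputing the bounds whenever the owning window raises LocationChanged or SizeChanged is one way to keep the layer aligned after moving or resizing.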

We could also try to hook into WPF's message processing. This is possible by using the HwndSource class. We can get the appropriate instance of this class by calling the static FromVisual() method of the PresentationSource class.

Some sample code that illustrates this:

```csharp
using System;
using System.Windows;
using System.Windows.Interop;

public partial class MainWindow : Window
{
    public MainWindow()
    {
        InitializeComponent();
    }

    protected override void OnSourceInitialized(EventArgs e)
    {
        base.OnSourceInitialized(e);
        // The window handle exists from this point on, so we can hook its message loop.
        var source = PresentationSource.FromVisual(this) as HwndSource;
        source?.AddHook(WndProc);
    }

    IntPtr WndProc(IntPtr hwnd, int msg, IntPtr wParam, IntPtr lParam, ref bool handled)
    {
        // Handle message ...
        return IntPtr.Zero;
    }
}
```

However, this is not as direct as in Windows Forms. The drawback is that WPF has already done some preprocessing by the time our hook sees the messages, and this preprocessing has already captured all events that are known to WPF. For our purposes this approach is therefore more or less useless.

With our own layer we get an event API that allows us to replace the three event handlers of the stylus approach above with the following two. We do not need to capture the touch device as before, which is why we can omit the third handler that was responsible for StylusUp events.

```csharp
void WMTouchDown(Object sender, WMTouchEventArgs e)
{
    if (canvas1 != null)
    {
        canvas1.Children.Clear();
        var id = e.Id;
        // Record the ID of the first touch point if it hasn't been recorded.
        if (firstId == -1)
            firstId = id;
    }
}

void WMTouchMove(Object sender, WMTouchEventArgs e)
{
    if (canvas1 != null)
    {
        var id = e.Id;
        var tp = new Point(e.LocationX, e.LocationY);

        if (id == firstId)
        {
            // This is the first touch point; just record its position.
            pt1 = tp;
        }
        else
        {
            // Any other touch point: record it and draw a line to the first one.
            pt2 = tp;
            canvas1.Children.Add(new Line
            {
                Stroke = new RadialGradientBrush(Colors.White, Colors.Black),
                X1 = pt1.X,
                X2 = pt2.X,
                Y1 = pt1.Y,
                Y2 = pt2.Y,
                StrokeThickness = 2
            });
        }
    }
}
```
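The same two-point bookkeeping (pt1 and pt2) is all that the two-finger zoom scenario from the problem description needs. A hypothetical PinchZoom helper, sketched below, derives the zoom factor as the ratio between the current and the initial distance of the two points. It could be fed from WMTouchDown and WMTouchMove, but that wiring is an assumption and not part of the sample project.

```csharp
using System;

// Hypothetical helper: zoom factor = current distance / initial distance
// of the two touch points.
public sealed class PinchZoom
{
    private double startDistance;

    private static double Distance(double x1, double y1, double x2, double y2)
    {
        double dx = x2 - x1, dy = y2 - y1;
        return Math.Sqrt(dx * dx + dy * dy);
    }

    // Call when the second finger touches down.
    public void Begin(double x1, double y1, double x2, double y2) =>
        startDistance = Distance(x1, y1, x2, y2);

    // Call on every move; > 1 means zoom in, < 1 means zoom out.
    public double Scale(double x1, double y1, double x2, double y2)
    {
        if (startDistance <= 0) return 1.0;   // no valid start: no zoom
        return Distance(x1, y1, x2, y2) / startDistance;
    }
}
```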

The API looks very similar, which is an important property of the facade we are building. To trigger these events we need to hook into the message loop of our own Form. The relevant message is WM_TOUCH (0x0240), whose value is stored in the Unmanaged class. This class contains all utilities needed to communicate with native code, i.e. structures, constants and function declarations.
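The Unmanaged class itself is not shown in the article. The following reconstruction is a sketch based on the documented Win32 touch API (WM_TOUCH, TOUCHINPUT, GetTouchInputInfo, CloseTouchInputHandle, RegisterTouchWindow). Member names follow the snippets in this article where they appear; everything else, including the LoWord and TouchInputSize helpers, is an assumption.

```csharp
using System;
using System.Runtime.InteropServices;

// Possible shape of the Unmanaged helper class (reconstruction, not the original).
public static class Unmanaged
{
    public const int WM_TOUCH = 0x0240;

    // TOUCHINPUT.dwFlags bits as defined in winuser.h.
    public const int TOUCHEVENTF_MOVE = 0x0001;
    public const int TOUCHEVENTF_DOWN = 0x0002;
    public const int TOUCHEVENTF_UP = 0x0004;

    // Managed mirror of the native TOUCHINPUT structure.
    [StructLayout(LayoutKind.Sequential)]
    public struct TOUCHINPUT
    {
        public int x;              // hundredths of a physical screen pixel
        public int y;
        public IntPtr hSource;
        public int dwID;           // id of the contact while it is down
        public int dwFlags;        // TOUCHEVENTF_* bits
        public int dwMask;
        public int dwTime;
        public IntPtr dwExtraInfo;
        public int cxContact;      // contact size, also in hundredths of a pixel
        public int cyContact;
    }

    [DllImport("user32")]
    [return: MarshalAs(UnmanagedType.Bool)]
    public static extern bool RegisterTouchWindow(IntPtr hWnd, uint ulFlags);

    [DllImport("user32")]
    [return: MarshalAs(UnmanagedType.Bool)]
    public static extern bool GetTouchInputInfo(IntPtr hTouchInput, int cInputs,
        [In, Out] TOUCHINPUT[] pInputs, int cbSize);

    [DllImport("user32")]
    [return: MarshalAs(UnmanagedType.Bool)]
    public static extern bool CloseTouchInputHandle(IntPtr hTouchInput);

    // cbSize argument for GetTouchInputInfo.
    public static readonly int TouchInputSize = Marshal.SizeOf<TOUCHINPUT>();

    // The low word of WParam holds the number of touch points in a WM_TOUCH message.
    public static int LoWord(int value) => value & 0xFFFF;
}
```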

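```csharp
// Member of the transparent layer Form (System.Windows.Forms.Form);
// PermissionSet requires System.Security.Permissions.
[PermissionSet(SecurityAction.Demand, Name = "FullTrust")]
protected override void WndProc(ref Message m)
{
    var handled = false;

    if (m.Msg == Unmanaged.WM_TOUCH)
        handled = HandleTouch(ref m);

    base.WndProc(ref m);

    // Acknowledge the message if we handled it.
    if (handled)
        m.Result = new IntPtr(1);
}
```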

The actual handling is realized in the HandleTouch() method. Basically this method just looks at the contained touch information. If event handlers are attached, the event arguments are computed and the corresponding event is raised.

```csharp
Boolean HandleTouch(ref Message m)
{
    // Get the input count from the low word of WParam
    var inputCount = LoWord(m.WParam.ToInt32());

    // Create an array of TOUCHINPUT structs with the size of the computed input count
    var inputs = new Unmanaged.TOUCHINPUT[inputCount];

    // Stop if we could not retrieve the touch info
    // (touchInputSize is the marshalled size of Unmanaged.TOUCHINPUT)
    if (!Unmanaged.GetTouchInputInfo(m.LParam, inputCount, inputs, touchInputSize))
        return false;

    var handled = false;

    // Let's see if we can handle this by iterating over all touch points
    for (int i = 0; i < inputCount; i++)
    {
        var input = inputs[i];

        // Get the appropriate handler for this touch point
        EventHandler<WMTouchEventArgs> handler = null;

        if ((input.dwFlags & Unmanaged.TOUCHEVENTF_DOWN) != 0)
            handler = WMTouchDown;
        else if ((input.dwFlags & Unmanaged.TOUCHEVENTF_UP) != 0)
            handler = WMTouchUp;
        else if ((input.dwFlags & Unmanaged.TOUCHEVENTF_MOVE) != 0)
            handler = WMTouchMove;

        // Convert message parameters into touch event arguments and raise the event
        if (handler != null)
        {
            // All dimensions are 1/100 of a pixel; convert them to pixels.
            // parent is the WPF element below the layer (win is presumably an alias
            // for System.Windows); PointFromScreen converts to client coordinates.
            var pt = parent.PointFromScreen(new win.Point(input.x * 0.01, input.y * 0.01));

            var te = new WMTouchEventArgs
            {
                ContactY = input.cyContact * 0.01,
                ContactX = input.cxContact * 0.01,
                Id = input.dwID,
                LocationX = pt.X,
                LocationY = pt.Y,
                Time = input.dwTime,
                Mask = input.dwMask,
                Flags = input.dwFlags,
                Count = inputCount
            };

            // Invoke the handler; obviously we handled something
            handler(this, te);
            handled = true;
        }
    }

    // Release the touch input handle once the data has been read
    Unmanaged.CloseTouchInputHandle(m.LParam);
    return handled;
}
```

We never use this code directly; instead we communicate with it through a small API. This API does not expose any Windows Forms classes, i.e. projects using the layer do not need a direct reference to any Windows Forms assembly.
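A possible shape of this facade is sketched below. The property names of WMTouchEventArgs are taken from the object initializer in HandleTouch, while their types and the IWMInputLayer interface name are assumptions. The point is that only plain .NET types appear in the public surface, so consumers never see System.Windows.Forms.

```csharp
using System;

// Event arguments as populated in HandleTouch (types are assumptions).
public class WMTouchEventArgs : EventArgs
{
    public int Id { get; set; }             // touch contact id (dwID)
    public double LocationX { get; set; }   // position relative to the WPF element
    public double LocationY { get; set; }
    public double ContactX { get; set; }    // contact size in pixels
    public double ContactY { get; set; }
    public int Time { get; set; }
    public int Mask { get; set; }
    public int Flags { get; set; }          // raw TOUCHEVENTF_* bits
    public int Count { get; set; }          // touch points in the same message
}

// Hypothetical public surface of the layer: no Windows Forms types involved.
public interface IWMInputLayer
{
    event EventHandler<WMTouchEventArgs> WMTouchDown;
    event EventHandler<WMTouchEventArgs> WMTouchMove;
    event EventHandler<WMTouchEventArgs> WMTouchUp;
    bool Active { get; set; }
}
```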

Using the code

The supplied sample project contains a library with the layer, specialized for use with a Canvas element. The usage is not restricted to a canvas element; actually any FrameworkElement will do the job. However, some parts of the library might be too specialized for their original purpose, so modify them if required.

Most demo applications in the source code show a canvas that is used for touch drawing. Each application in this category uses a different kind of event source. Feel free to increase the finger count and observe the delays in the non-layered demonstrations.

The drawing demo looks like the following:

Additionally, there is an ellipse modification demo included, which works with the Stylus* events. This demonstration could also use any of the other techniques; however, it is important to show that the workload assigned to WPF actually matters. It is also quite interesting to have another kind of interaction.

This demo looks like the following image (for three fingers on the screen):

The code of all demos is very similar and just for demonstration purposes. Using the layer library is quite simple. There is only one point of access: the static Create() method of the WMInputLayerFactory class.

A suitable usage is given by the following snippet, within the constructor of a WPF window.

```csharp
public MainWindow()
{
    InitializeComponent();

    Loaded += (s, ev) =>
    {
        // Keep a reference to the following object if you want to deactivate it at some point
        var layer = WMInputLayerFactory.Create(canvas1);
        layer.WMTouchDown += layer_WMTouchDown;
        layer.WMTouchMove += layer_WMTouchMove;
        layer.Active = true;
    };
}
```

The layer is activated or deactivated by setting the boolean Active property to true or false.

Another WPF-only way

Using the information from above and a little secret hidden in a rather obscure place in the MSDN, we can construct a WPF-only solution. This solution has the advantage that no layer has to be managed. Additionally, all other events (mouse, keyboard, ...) work out of the box.

The only drawback of this method is that touch has to be registered manually for every window that should receive it. One could create a new Window class called TouchWindow; however, the problem remains that the previously introduced touch events will no longer work anywhere in the application.

So what is this mysterious way about? First we need to run the following code (anywhere in our application, once we require very responsive multi-touch):

```csharp
// Requires: using System.Reflection; using System.Windows.Input;
static void DisableWPFTabletSupport()
{
    // Get a collection of the tablet devices for this window.
    var devices = Tablet.TabletDevices;

    if (devices.Count > 0)
    {
        // Get the Type of InputManager.
        var inputManagerType = typeof(InputManager);

        // Call the StylusLogic method on the InputManager.Current instance.
        var stylusLogic = inputManagerType.InvokeMember("StylusLogic",
            BindingFlags.GetProperty | BindingFlags.Instance | BindingFlags.NonPublic,
            null, InputManager.Current, null);

        if (stylusLogic != null)
        {
            // Get the type of the stylusLogic returned from the call to StylusLogic.
            var stylusLogicType = stylusLogic.GetType();

            // Loop until there are no more devices to remove.
            while (devices.Count > 0)
            {
                // Remove the first tablet device in the devices collection.
                stylusLogicType.InvokeMember("OnTabletRemoved",
                    BindingFlags.InvokeMethod | BindingFlags.Instance | BindingFlags.NonPublic,
                    null, stylusLogic, new object[] { (uint)0 });
            }
        }
    }
}
```

The code above is taken from MSDN and basically removes all tablet devices from WPF's stylus logic by using reflection. There is not much magic behind this code; however, it is a nice snippet that saves us from reflecting over WPF ourselves and researching member names and modifiers. Once this code has run, no control or window will receive stylus (or touch in general) events anymore. So where do we get our touch input from?

The concept is the same as before with our layer. We use the knowledge that we can filter the message loop within WPF, and additionally register the window for touch input with RegisterTouchWindow, so that Windows sends WM_TOUCH messages to it. This time (with stylus support disabled) WPF does not interfere with our plans, which is why the following code works.

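```csharp
// Requires: using System.Runtime.InteropServices; using System.Windows.Interop;
[DllImport("user32")]
[return: MarshalAs(UnmanagedType.Bool)]
static extern bool RegisterTouchWindow(IntPtr hWnd, uint ulFlags);

const Int32 WM_TOUCH = 0x0240;

protected override void OnSourceInitialized(EventArgs e)
{
    base.OnSourceInitialized(e);
    var source = PresentationSource.FromVisual(this) as HwndSource;
    source.AddHook(WndProc);
    // Ask Windows to send WM_TOUCH messages to this window.
    RegisterTouchWindow(source.Handle, 0);
}

IntPtr WndProc(IntPtr hwnd, Int32 msg, IntPtr wParam, IntPtr lParam, ref Boolean handled)
{
    if (msg == WM_TOUCH)
    {
        // HandleTouch(wParam, lParam) decodes the touch points like the Form version.
        handled = HandleTouch(wParam, lParam);
        return new IntPtr(1);
    }

    return IntPtr.Zero;
}
```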

The HandleTouch method is quite similar to the one before. All in all, we avoid managing an extra layer at the cost of giving up the built-in touch events. For most applications requiring long-lasting multi-touch interactions this solution should be the best.

I updated the source with this demo. Caution: once the "disabled stylus" demo has been shown, most other demos (except the layer demo) will not work any more, and the demo application has to be restarted.

An illustrative sample

I did prepare something for this section; however, I decided to publish it in a standalone article, since an article about Quantum Striker does not exist yet. Additionally, there are many interesting things about the Quantum Striker game, like a well-performing use of NAudio for playing audio effects in a game or the physics behind the game.

Additionally there are some interesting things that have not even been used in production like a sprite manager. I think that giving the whole game its own article could be interesting. This also allows me to write about other features than just the multi-touch layer.

Finally, Quantum Striker will probably also be released as a Windows Store app. Making the application portable might also be interesting for some people. In my opinion it is already remarkable how much of the original code is portable without any effort.

Points of Interest

Quantum Striker is now finished. If you want to find out more about this game, either watch our video on YouTube or go to the official webpage, quantumstriker.anglevisions.com. The following image also leads to the official YouTube video:

I also highly recommend the references. They discuss the problem of WPF with delayed touch events, and it is quite interesting to see how the whole topic developed. Unfortunately, it seems that there won't be any improvements in the near future. In my opinion they are a great starting point for further reading.

History

- v1.0.0 | Initial Release | 04.12.2013

- v1.1.0 | Updated info on the real time stylus | 05.12.2013