Contents

- Introduction

- Background

- HTML5 parser

- CSS4 parser

- DOM implementation

- Performance

- Using the code

- Points of Interest

- References

- History

Introduction

Nowadays everything is centered around the web. We are always either downloading or uploading some data. Our applications are also getting more chatty, since users want to synchronize their data. On the other side the update process is getting more and more direct, by placing volatile parts of our application in the cloud.

The whole web movement does not only come along with binary data. The movement is mainly pushed by HTML, since most of the output will finally be rendered to some description code that is known as HTML. This description language is decorated with two nice additions: Styling in form of CSS3 (yet another description language) and scripting in form of JavaScript (officially specified as ECMAScript).

This unstoppable trend is now gaining momentum since more than a decade. Nowadays having a well-designed webpage is the cornerstone of every company. The good thing is that HTML is fairly simple and even people without any programming knowledge at all can create a page. In the most simple approximation we just insert some text in a file, and open it in the browser (probably after we renamed the file to *.html).

To make a long story short: Even in our applications we sometimes might need to communicate with a webserver to deliver some HTML. This is all quite fine and solved by the framework. We have powerful classes that handle the whole communication by knowing the required TCP and HTTP actions. However, once we need to do some work on the document we are basically lost. This is where AngleSharp comes into play.

Background

The idea for AngleSharp has been born about a year ago (and I will explain in the next paragraphs why AngleSharp goes beyond HtmlAgilityPack or similar solutions). The main reason to use AngleSharp is actually to have access to the DOM as you would have in the browser. The only difference is that in this case you will use C# (or any other .NET language). There is another difference (by design), which changed the names of the properties and methods from camel case to pascal case (i.e. the first letter is capitalized).

Therefore before we go into details of the implementation we need to have a look what's the long-term goal of AngleSharp. Actually there are several goals:

- Parsers for HTML, XML, SVG, MathML and CSS

- Create CSS stylesheets / style rules

- Return the DOM of a document

- Run modifications on the DOM

- *Provide the basis for a possible renderer

The core parser is certainly given by the HTML5 parser. A CSS4 parser is a natural addition, since HTML documents contain stylesheet references and style attributes. Having another XML parser that complies with the current W3C specification is a good addition, since SVG and MathML (which can also occur in HTML documents) can be parsed as XML documents. The only difference lies in the generated document, which has different semantics and a uses a different DOM.

The * point is quite interesting. The basic idea behind this is not that a browser that is written entirely in C# will be build upon AngleSharp (however, that could happen). The motivation here lies in creating a new, cross-platform UI framework, which uses HTML as a description language with CSS for styling. Of course this is a quite ambitious goal, and it will certainly not be solved by this library, however, this library would play an important in the creation of this framework.

In the next couple of sections we will walk through some of the important steps in creating an HTML5 and a CSS4 parser.

HTML5 parser

Writing an HTML5 parser is much harder than most people think, since the HTML5 parser has to handle a lot more than just those angle brackets. The main issue is that a lot of edge cases arise with not well-defined documents / document-fragments. Also formatting is not as easy as it seems, since some tags have to be treated different than others.

All in all it would be possible to write such a parser without the official specification, however, one either has to know all edge cases (and manage to bring them into code or on paper) or the parser will simple be only working on a fraction of all webpages.

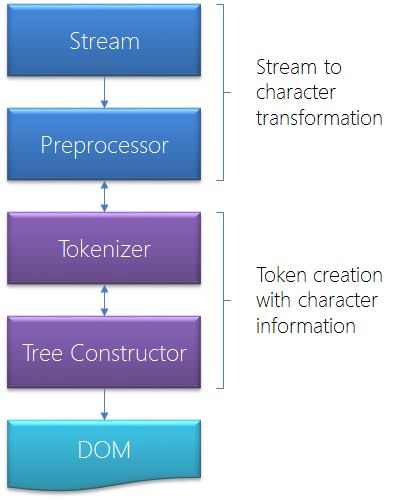

Here the specification helps a lot and gives us the whole range of possible states with every mutation that is possible. The main workflow is quite simple: We start with a Stream, which could either be directly from a file on the local machine, the data from the network or an already given string. This Stream is given to the preprocessor, which will control the flow of reading from the Stream and buffering already read contents.

Finally we are ready to hand in some data to the tokenizer, which transforms the data from the preprocessor to a sequence of useful objects. These temporary objects are then used to construct the DOM. The tree construction might have to switch the state of the tokenizer on several occasions.

The following image shows the general scheme that is used for parsing HTML documents.

In the following sections we will walk through the most important parts of the HTML5 parser implementation.

Tokenization

Having a working stream preprocessor is the basis for any tokenization process. The tokenization process is the basis for the tree construction, as we will see in the next section. What does the tokenization process do exactly? The tokenization process transforms the characters that have been processed by the input stream preprocessor to so-called tokens. Those tokens are objects, which are then used to construct the tree, which will be the DOM. In HTML there are not many different tokens. In fact we just have a few:

- Tag (with the name, an open/close flag, the tag's attributes and a self-closed flag)

- Doctype (with additional properties)

- Character (with the character payload)

- Comment (with the text payload)

- EOF

The state-machine of the tokenizer is actually quite complicated, since there are many (legacy) rules that have to be respected. Also some of the states cannot be entered from the tokenizer alone. This is also kind of special in HTML as compared to most parsers. Therefore the tokenizer has to be open for changes, which will usually be initiated by the tree constructor.

The most used token is the character token. Since we might need to distinguish between single characters (for instance if we enter a <pre> element an initial line feed character has to be ignored) we have to return single character tokens. The initial tokenizer state is the PCData state. The method is as simple as the following:

HtmlToken Data(Char c)

{

switch (c)

{

case Specification.AMPERSAND:

var value = CharacterReference(src.Next);

if (value == null) return HtmlToken.Character(Specification.AMPERSAND);

return HtmlToken.Characters(value);

case Specification.LT:

return TagOpen(src.Next);

case Specification.NULL:

RaiseErrorOccurred(ErrorCode.NULL);

return Data(src.Next);

case Specification.EOF:

return HtmlToken.EOF;

default:

return HtmlToken.Character(c);

}

}

There are some states which cannot be reached from the PCData state. For instance the Plaintext or RCData states can never be entered from the tokenizer alone. Additionally the Plaintext state can never be left. The RCData state is entered when the HTML tree construction detects e.g. a <title> or a <textarea> element. On the other side we also have a Rawtext state that could be invoked by e.g. a <noscript> element. We can already see that the number of states and rules is much bigger than we might initially think of.

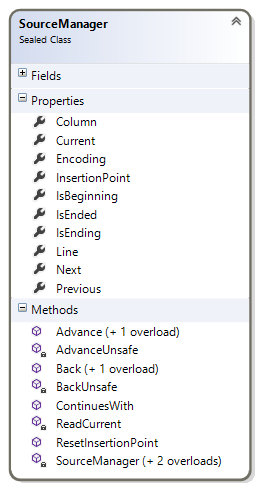

A quite important helper for the tokenizer (and other tokenizers / parsers in the library) is the SourceManager class. This class handles an incoming stream of (character) data. The definition is shown in the following image.

This helper is more a less a Stream handler, since it takes a Stream instance and reads it with a detected encoding. It is also possible to change the encoding during the reading process. In the future this class might change, since until now it is based on the TextReader class to read text with a given Encoding from a Stream. In the future it might be better to handle that with a custom class, that supports reading backwards with a different encoding out of the box.

Tree construction

Once we have a working stream of tokens we can start constructing the DOM. There are several lists we need to take care of:

- Currently open tags.

- Active formatting elements.

- Special flags.

The first list is quite obvious. Since we will open tags, which will include other tags, we need to memorize what kind of path we've taken along the road. The second one is not so obvious. It could be that currently open elements have some kind of formatting effect on inserted elements. Such elements are considered to be formatting elements. A good example would be the <b> tag (bold). Once it is applied all* contained elements will have bold text. There are some exceptions (*), but this is what makes the HTML5 non-trivial.

The third list is actually very non-trivial and impossible to reconstruct without the official specification. There are special cases for some elements in some scenarios. This is why the HTML5 parser distinguishes between <body>, <table>, <select> and several other sections. This differentiation is also required to determine if certain elements have to be auto-inserted. For instance the following snippet is automatically transformed:

<pre contenteditable>

The HTML parser does not recognize the <pre> tag as being a legal tag before the <html> or <body> tag. Thus a fallback is initialized, which first inserts the <html> tag and afterwards the <body> tag. Inserting the <body> tag directly within the <html> tag also creates an (empty) <head> element. Finally at the end of the file everything is closed, which implies that our <pre> node is also closed as it should be.

<html>

<head/>

<body>

<pre contenteditable=""></pre>

</body>

</html>

There are hard edge cases, which are quite suitable to test the state of the tree constructor. The following is a good test for finding out if the "Heisenberg algorithm" is working correctly and invoked in case of non-conforming usage of tables and anchor tags. The invocation should take place on inserting another anchor element.

<a href="a">a<table><a href="b">b</table>x

The resulting HTML DOM tree is given by the following snippet (without <html>, <body> etc. tags):

<a href="a">

a

<a href="b">b</a>

<table/>

</a>

<a href="b">x</a>

Here we see that the character b is taken out of the <table>. The hyperlink has therefore to start before the table and continue afterwards. This results in a duplication of the anchor tag. All in all those transformations are non-trivial.

Tables are responsible for some edge cases. Most of the edge cases are due to text having no cell environment within a table. The following example demonstrates this:

A<table>B<tr>C</tr>D</table>

Here we have some text that does not have a <td> or <th> parent. The result is the following:

ABCD

<table>

<tbody>

<tr/>

</tbody>

</table>

The whole text is moved before the actual <table> element. Additionally, since we have a <tr> element being defined, but neither <tbody> nor <thead> nor <tfoot>, a <tbody> section is inserted.

Of course there is more than meets the eye. A big part of the validate HTML5 parsing goes into error correction and constructing tables. Also the formatting elements have to fulfill some rules. Everyone who is interested in the details should take a look at the code. Even though the code might not be as readable as usual LOB application code, it should still be possible to read it with the appropriate comments and the inserted regions.

Tests

A very important point was to integrate unit tests. Due to the complicated parser design most of the work has not been dictated by the paradigm of TDD, however, in some parts tests have been placed before any line of code has been written. All in all it was important to place a wide range of unit tests. The tree constructor of the HTML parser is one of the primary goals of the testing library.

Also the DOM objects have been subject to unit tests. The main objective here was to ensure that these objects are working as expected. This means that errors are only thrown on the defined illegal operations and that integrated binding capabilities are functional. Such errors should never occur during the parsing process, since the tree constructor is expected to never try an illegal operation.

Another testing environment has been set up with the AzureWebState project, which aims to crawl webpages from a database. This makes it easy to spot a severe problem with the parser (like StackOverflowException or OutOfMemoryException) or potential performance issues.

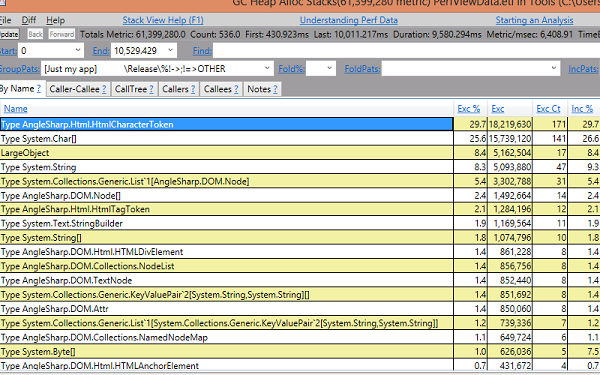

Reliability tests are not the only kind of tests we are interested in. If we need to wait too long for the parsing result we might be in trouble. Modern web browsers require between 1ms and 100ms for webpages. Hence everything that goes beyond 100ms has to be optimized. Luckily we have some great tools. Visual Studio 2012 provides a great tool for analyzing performance, however, for me in some scenarios PerfView seems to be the best choice (it works across the whole machine and is independent of VS).

A quick look at the memory consumption gives us some indicators that we might want to do something about allocating all those HtmlCharacterToken instances. Here a pool for character tokens could already be very beneficial. However, a first test showed, that the impact on performance (in terms of speed of processing) is negligible.

CSS4 parser

There are already some CSS parser out there, some of them written in C#. However, most of them make just a really simple parsing, without evaluating selectors or ignore the specific meaning of a certain property or value. Also most of them are way below CSS3 or do not support any @-rule (like namespace, import, ...) at all.

Since HTML is using CSS as its layout / styling language it was quite natural to integrate CSS directly. There are several places where this has been proven to be very useful:

- Selectors are required for methods like

QuerySelector. - Every element can have a

styleattribute, which has a non-string DOM representation. - The stylesheet(s) is / are considered by the DOM directly.

- The

<style>element has a special meaning for the HTML parser.

At the moment external stylesheets will not be parsed directly. The reason is quite simple: AngleSharp should require the least amount of external references. In the most ideal case AngleSharp should be easy to port (or even to exist) as a portable class library (where the intersection would be between "Metro", "Windows Phone" and "WPF"). This might not be possible at the moment, due to using TaskCompletitionSource at certain points, but this is actually the reason why the whole library is not decorated with Task instances or even await and async keywords all over the place.

Tokenization

The CSS tokenizer is not as complicated as the HTML one. What makes the CSS tokenizer somewhat complex is that it has to handle a lot more types of tokens. In the CSS tokenizer we have:

- String (either in single or double quotes)

- Url (a string in the

url()function) - Hash (mostly for selectors like #abc or similar, usually not for colors)

- AtKeyword (used for @-rules)

- Ident (any identifier, i.e. used in selectors, specifiers, properties or values)

- Function (functions are mostly found in values, sometimes in rules)

- Number (any number like 5 or 5.2 or 7e-3)

- Percentage (a special kind of dimension value, e.g. 10%)

- Dimension (any dimensional number, e.g. 5px, 8em or 290deg)

- Range (range values create a range of unicode values)

- Cdo (a special kind of open comment, i.e.

<--) - Cdc (a special kind of close comment, i.e.

-->) - Column (personally I've never seen this in CSS:

||) - Delim (any delimiter like a comma or a single hash)

- IncludeMatch (the include match

~=in an attribute selector) - DashMatch (the dash match

|=in an attribute selector) - PrefixMatch (the prefix match

^=in an attribute selector) - SuffixMatch (the suffix match

$=in an attribute selector) - SubstringMatch (the substring match

*=in an attribute selector) - NotMatch (the not match

!=in an attribute selector) - RoundBracketOpen and RoundBracketClose

- CurlyBracketOpen and CurlyBracketClose

- SquareBracketOpen and SquareBracketClose

- Colon (colons separate names from values in properties)

- Comma (used to separate various values or selectors)

- Semicolon (mainly used to end a declaration)

- Whitespace (most whitespaces have only separation reasons - meaningful in selectors)

The CSS tokenizer is a simple stream based tokenizer, which returns an iterator of tokens. This iterator can then be used. Every method in the CssParserclass takes such an iterator. The great advantage of using iterators is that we can basically use any token source. For instance we could use another method to generate a second iterator based on the first one. This method would only iterate over a subset (like the contents of some curly brackets). The great advantage is that both stream advance, but we do not have to proceed in a very complicated token management.

Hence appending rules is as easy as the following code snippet:

void AppendRules(IEnumerator<CssToken> source, List>CSSRule> rules)

{

while (source.MoveNext())

{

switch (source.Current.Type)

{

case CssTokenType.Cdc:

case CssTokenType.Cdo:

case CssTokenType.Whitespace:

break;

case CssTokenType.AtKeyword:

rules.Add(CreateAtRule(source));

break;

default:

rules.Add(CreateStyleRule(source));

break;

}

}

}

Here we just ignore some tokens. In the special case of an at-keyword we start a new @-rule, otherwise we assume that a style rule has to be created. Style rule start with a selector as we know. A valid selector makes more constraints on the possible input tokens, but in general takes any tokens as input.

Quite often we want to skip any whitespaces to come from the current position to the next position. The following snippet allows us to do that:

static Boolean SkipToNextNonWhitespace(IEnumerator<CssToken> source)

{

while (source.MoveNext())

if (source.Current.Type != CssTokenType.Whitespace)

return true;

return false;

}

Additionally we also get the information if we reached the end of the token stream.

Stylesheet creation

The stylesheet is then created with all the information. Right now special rules like the CSSNamespaceRule or CSSImportRule are parsed correctly but ignored afterwards. This has to be integrated at some point in the future.

Additionally we only get a very generic (and meaningless) property called CSSProperty. In the future the generic property will only be used for unknown (or obsolete) declarations, while more specialized properties will be used for meaningful declarations like color: #f00 or font-size: 10pt. This will then also influence the parsing of values, which must take the required input type into consideration.

Another point is that CSS functions (besides url()) are not included yet. However, these are quite important, since the toggle() and calc() or attr() functions are getting used more and more these days. Additionally rgb() + rgba() and hsl() + hsla() or others are mandatory.

Once we hit an at-rule we basically need to parse special cases for special rules. The following code snippet describes this:

CSSRule CreateAtRule(IEnumerator<CssToken> source)

{

var name = ((CssKeywordToken)source.Current).Data;

SkipToNextNonWhitespace(source);

switch (name)

{

case CSSMediaRule.RuleName: return CreateMediaRule(source);

case CSSPageRule.RuleName: return CreatePageRule(source);

case CSSImportRule.RuleName: return CreateImportRule(source);

case CSSFontFaceRule.RuleName: return CreateFontFaceRule(source);

case CSSCharsetRule.RuleName: return CreateCharsetRule(source);

case CSSNamespaceRule.RuleName: return CreateNamespaceRule(source);

case CSSSupportsRule.RuleName: return CreateSupportsRule(source);

case CSSKeyframesRule.RuleName: return CreateKeyframesRule(source);

default: return CreateUnknownRule(name, source);

}

}

Let's see how the parsing for the CSSFontFaceRule is implemented. Here we see that we push the font-face rule to the stack of open rules for the duration of the process. This ensures that every rule gets the right parent rule assigned.

CSSFontFaceRule CreateFontFaceRule(IEnumerator source)

{

var fontface = new CSSFontFaceRule();

fontface.ParentStyleSheet = sheet;

fontface.ParentRule = CurrentRule;

open.Push(fontface);

if(source.Current.Type == CssTokenType.CurlyBracketOpen)

{

if (SkipToNextNonWhitespace(source))

{

var tokens = LimitToCurrentBlock(source);

AppendDeclarations(tokens.GetEnumerator(), fontface.CssRules.List);

source.MoveNext();

}

}

open.Pop();

return fontface;

}

Additionally we use the LimitToCurrentBlock method to stay within the current curly brackets. Another thing is that we re-use the AppendDeclarations method to append declarations to the given font-face rule. This is no general rule, since e.g. a media rule will contain other rules instead of declarations.

Tests

A very important testing class is represented by CSS selectors. Since these selectors are used on many occasions (in CSS, for querying the document, ...) it was very important to include a set of useful unit tests. Luckily the guys who maintain the Sizzle Selector engine (which is primarely used in jQuery) solved this problem already.

These tests look like the following three samples:

[TestMethod]

public void IdSelectorWithElement()

{

var result = RunQuery("div#myDiv");

Assert.AreEqual(1, result.Length);

Assert.AreEqual("div", result[0].NodeName);

}

[TestMethod]

public void PseudoSelectorOnlyChild()

{

Assert.AreEqual(3, RunQuery("*:only-child").Length);

Assert.AreEqual(1, RunQuery("p:only-child").Length);

}

[TestMethod]

public void NthChildNoPrefixWithDigit()

{

var result = RunQuery(":nth-child(2)");

Assert.AreEqual(4, result.Length);

Assert.AreEqual("body", result[0].NodeName);

Assert.AreEqual("p", result[1].NodeName);

Assert.AreEqual("span", result[2].NodeName);

Assert.AreEqual("p", result[3].NodeName);

}

So we compare known results with the result of our evaluation. Additionally we also care about the order of the results. This means that the tree walker is doing the right thing.

DOM implementation

The whole project would be quite useless without returning an object representation of the given HTML source code. Obviously we have two options:

- Defining our own format / objects

- Using the official specification

Due to the project's goal the decision was quite obvious: The created objects should have a public API that is identical / very similar to the official specification. Users of AngleSharp will therefore have several advantages:

- The learning curve is non-existing for people who are familiar with the DOM

- Porting of code from C# to JavaScript is even more simplified

- Users who are not familiar with the HTML DOM will also learn something about the HTML DOM

- Other users will probably learn something as well, since everything can be accessed by intellisense

The last point is quite important here. A huge effort of the project went into (beginning to do a little bit of) writing something that represents a suitable documentation of the whole API and functions. Therefore enumerations, properties and methods, along with classes and events are documented. This means that a variety of learning possibilities is available.

Additionally all DOM objects will be decorated with a special kind of attribute, called DOMAttribute or simply DOM. This attribute could help to find out which objects (additionally to the most common types like String or Int32) could be used in a scripting language like JavaScript. In a way this integrates the IDL that is used in modern browsers.

The attribute also decorates properties and methods. A special kind of property is an indexer. Most indexers are named item by the W3C, however, since JavaScript is a language that supports indexers we don't see this very often. Nevertheless even here the decoration has been placed, which lets us choose how to use it.

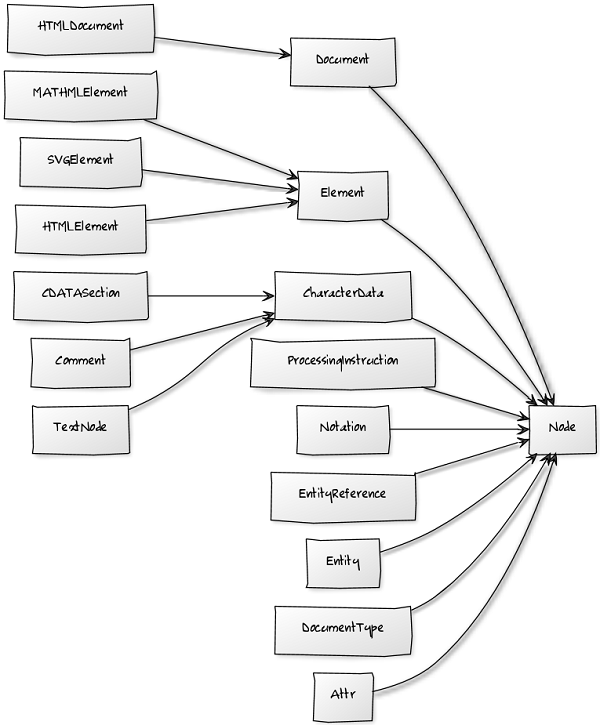

The basic DOM structure is displayed in the next figure.

It was quite difficult to find a truly complete reference. Even though the W3C creates the official standard, it is often in contradiction with itself. The problem is that the current specification is DOM4. If we take a look into any browser we will see that either not all elements there are available, or that additionally other elements are available. Using DOM3 as a reference points makes therefore more sense.

AngleSharp tries to find the right balance. The library contains most of the new API (even though not everything is implemented right now, e.g. the whole event system or the mutation objects), but also contains everything from DOM3 (or previous versions) that has been implemented and used across all major browsers.

Performance

The whole project has to be designed with performance in mind, however, this means that sometimes not very beautiful code could be found. Also everything has been programmed as close as possible to the specification, which has been the primary goal. The first objective was to apply the specification and create something that is working. After this has been achieved some performance optimization has been applied. In the end we can see that the whole parser is actually quite fast compared to the ones known from the big browsers.

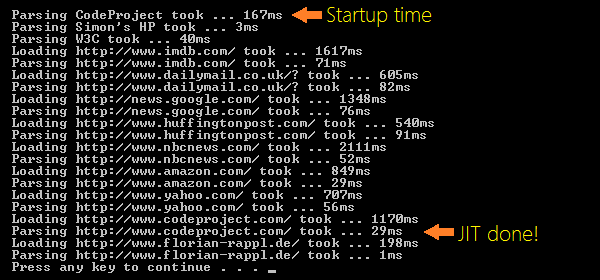

A big performance issue is the actual startup time. Here the JIT process is not only compiling the MSIL code to machine code, but also performing (necessary) optimizations. If we start some sample runs we can immediately see that the hot path are not optimized at all. The next screenshot shows a typical run.

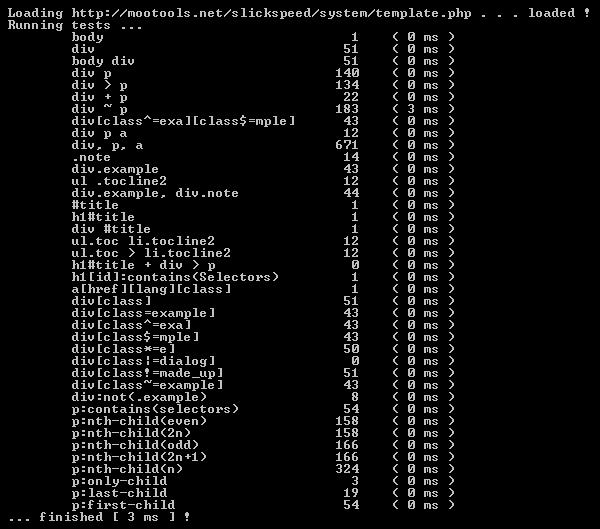

However, the JIT also does a great job at these optimizations. The code has been written in such a fashion that inlining and other crucial (and mostly trivial) optimizations are more likely to be performed by the JIT. A quite important speed test has been adopted from the JavaScript world: Slickspeed. This test shows us a whole range of data:

- The performance of our CSS tokenizer.

- The performance of our Selector creator.

- The performance of our tree walker.

- The reliability of our CSS Selectors.

- The reliability of our node tree.

On the same machine the fastest implementation in JavaScript makes heavy use of document.QuerySelectorAll. Hence our test is nearly a direct comparison against a browser (in this case Opera). The fastest implementation takes about 12ms in JavaScript. In C# we are able to have the same result in 3ms (same machine, release mode with a 64-bit CPU).

Caution This result should not convince you that C# / our implementation is faster than Opera / any browser, but that the performance is at least in a solid area. It should be noted that browsers are usually much more streamlined and probably faster, however, the performance of AngleSharp is quite acceptable.

The next screenshot has been taken while running the Slickspeed test. Please note that the real time aggregate is higher than 3ms, since the times have been added as integers to ensure an easy comparison with the original JavaScript Slickspeed benchmark.

In total we can say that the performance is already quite OK, even though no major efforts have been put into performance optimization. For documents of modest sizes we will be certainly far below 100ms and eventually (enough warm-up, document size, CPU speed) come close enough to 1ms.

Using the code

The easiest way to get AngleSharp is by using NuGet. The link to the NuGet package is at the end of the article (or just search for AngleSharp in the NuGet package manager official feed).

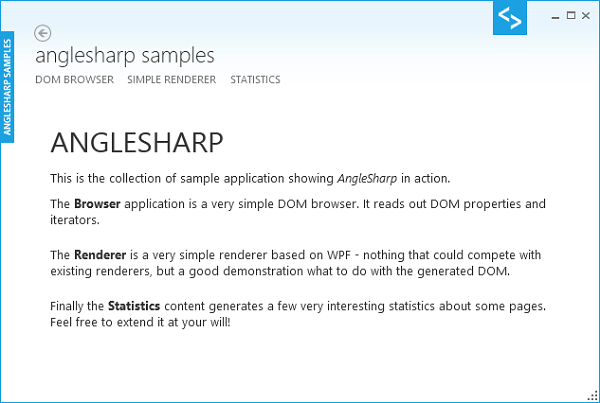

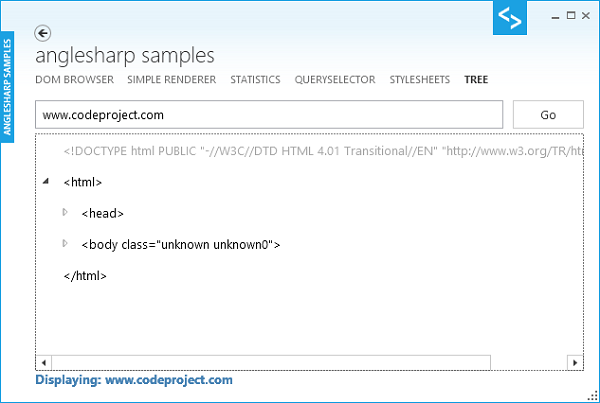

The solution that is available on the GitHub repository also contains a WPF application called Samples. This application looks like the following image:

Every sample uses the HTMLDocument instance in another way. The basic way of getting the document is quite easy:

async Task LoadAsync(String url, CancellationToken cancel)

{

var http = new HttpClient();

//Get a correct URL from the given one (e.g. transform codeproject.com to http://codeproject.com)

var uri = Sanitize(url);

//Make the request

var request = await http.GetAsync(uri);

cancel.ThrowIfCancellationRequested();

//Get the response stream

var response = await request.Content.ReadAsStreamAsync();

cancel.ThrowIfCancellationRequested();

//Get the document by using the DocumentBuilder class

var document = DocumentBuilder.Html(response);

cancel.ThrowIfCancellationRequested();

/* Use the document */

}

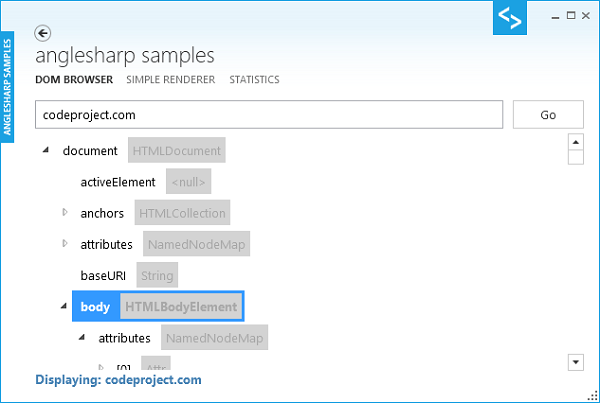

At the moment four sample usages are described. The first is a DOM-Browser. The sample creates a WPF TreeView that could be navigated through. The TreeView control contains all enumerable children and DOM properties of the document. The document is the HTMLDocument instance that has been received from the given URL.

Reading out these properties can be achieved with the following code. Here we assume that element is the current object in the DOM tree (e.g. the root element of a document like the HTMLHtmlElement or attributes like Attr etc.).

var type = element.GetType();

var typeName = FindName(type);

/* with the following definition:

FindName(MemberInfo member)

{

var objs = member.GetCustomAttributes(typeof(DOMAttribute), false);

if (objs.Length == 0) return member.Name;

return ((DOMAttribute)objs[0]).OfficialName;

}

*/

var properties = type.GetProperties(BindingFlags.Public | BindingFlags.Instance | BindingFlags.GetProperty)

.Where(m => m.GetCustomAttributes(typeof(DOMAttribute), false).Length > 0)

.OrderBy(m => m.Name);

foreach (var property in properties)

{

switch(property.GetIndexParameters().Length)

{

case 0:

children.Add(new TreeNodeViewModel(property.GetValue(element), FindName(property), this));

break;

case 1:

{

if (element is IEnumerable)

{

var collection = (IEnumerable)element;

var index = 0;

var idx = new object[1];

foreach (var item in collection)

{

idx[0] = index;

children.Add(new TreeNodeViewModel(item, "[" + index.ToString() + "]", this));

index++;

}

}

}

break;

}

}

Hovering over an element that does not contain items usually yields its value (e.g. a property that represents an int value would display the current value) as a tooltip. Next to the name of the property the exact DOM type is shown. The following screenshot shows this part of the sample application.

The renderer sample might sound interesting in the beginning, but in fact it just uses the WPF FlowDocument in a very rudimentary way. The output is actually not very readable and far away from the rendering that is done in other solutions (e.g. the HTMLRenderer project on CodePlex).

Nevertheless the sample shows how one could use the DOM to get information about various types of objects and use their information. As a little gimmick <img> tags are renderer as well, putting at least a little bit of color into the renderer. The screenshot has been taken while being on the English version of the Wikipedia homepage.

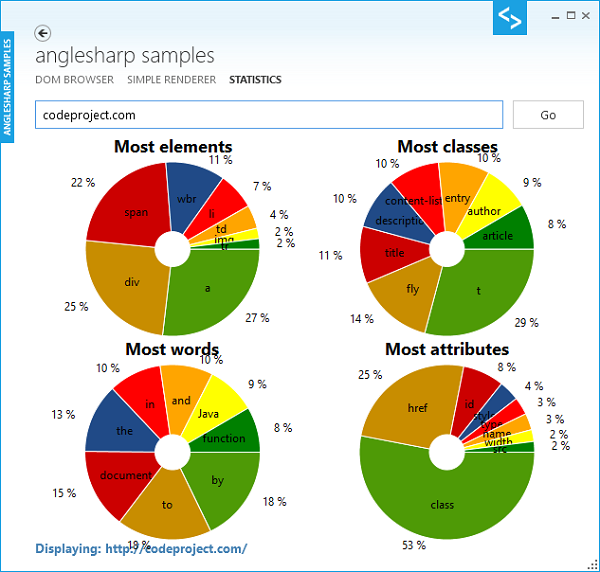

Much more interesting is the statistics sample. Here we gather data from the given URL. There are four statistics available, which might be more or less interesting:

- The top-8 elements (most used)

- The top-8 class names (most used)

- The top-8 attributes (most used)

- The top-8 words (most used)

The core of the statistics demo is the following snippet:

void Inspect(Element element, Dictionary<String, Int32> elements, Dictionary<String, Int32> classes, Dictionary<String, Int32> attributes)

{

//The current tag name has to be evaluated (for the most elements)

if (elements.ContainsKey(element.TagName))

elements[element.TagName]++;

else

elements.Add(element.TagName, 1);

//The class names have to be evaluated (for the most classes)

foreach (var cls in element.ClassList)

{

if (classes.ContainsKey(cls))

classes[cls]++;

else

classes.Add(cls, 1);

}

//The attribute names have to be evaluated (for the most attributes)

foreach (var attr in element.Attributes)

{

if (attributes.ContainsKey(attr.Name))

attributes[attr.Name]++;

else

attributes.Add(attr.Name, 1);

}

//Inspect the other elements

foreach (var child in element.Children)

Inspect(child, elements, classes, attributes);

}

This snippet is first used on the root element of the document. From this point on it will recursively call the method on its child elements. Later on the dictionaries can be sorted and evaluated using LINQ.

Additionally we perform some statistics on the text content in form of words. Here any word has to be at least 2 letters. For this sample OxyPlot has been used to display the pie charts. Obviously CodeProject likes to use anchor tags (who doesn't?) and a class called t (in my opinion very self-explanatory name!).

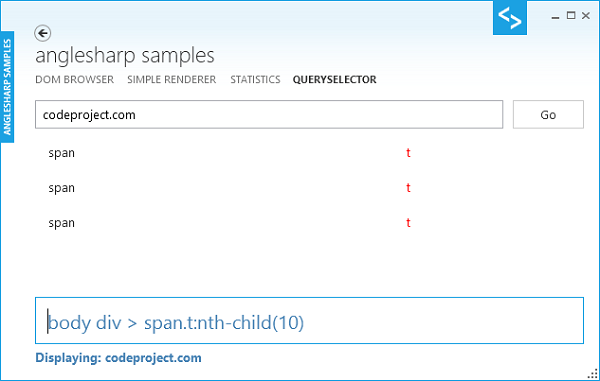

The final sample shows the usage of the DOM method querySelectorAll. Following the C# naming convention here use it like QuerySelectorAll. The list of elements is filtered as one enteres the selector in the TextBox element. The background color of the box indicates the status of the query - a red box tells us that an exception would be thrown due to a syntax error in the query.

The code is quite easy. Basically we take the document instance and call the QuerySelectorAll method with a selector string (like * or body > div or similar). Everyone who is familiar with the basic DOM syntax from JavaScript will recognize it instantly:

try

{

var elements = document.QuerySelectorAll(query);

source.Clear();

foreach (var element in elements)

source.Add(element);

Result = elements.Length;

}

catch(DOMException)

{

/* Syntax error */

}

Finally we take the list of elements (QuerySelectorAll gives us an HTMLCollection (which is a list of Element instances), while QuerySelector only returns one element or null) and push it to the observable collection of the viewmodel.

Update 1

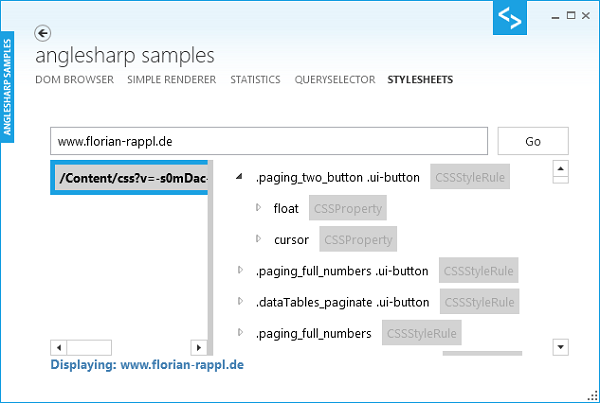

Another demo of interest might be the handling of stylesheets. The sample application has been updated with a short demo that reads out an arbitrary webpage and shows the available stylesheets (either <link> or <style> elements).

All in all the example looks like the following (with the available sources on the left and the stylesheet tree on the right).

Again the required code is not very complicated. In order to get the available stylesheets of an HTMLDocument object we only need to iterate over elements of its StyleSheets property. Here we will not obtain objects of type CSSStyleSheet but StyleSheet. This is a more general type as specified by the W3C.

for (int i = 0; i < document.StyleSheets.Length; i++)

{

var s = document.StyleSheets[i];

source.Add(s);

}

In the next part we actually need to create a CSSStyleSheet containing the rules. Here we have two possibilities:

- The stylesheet originates from a

<style>element and is inlined. - The stylesheet is associated with a

<link>element and has to be loaded from an external source.

In the first case we already have access to the source. In the second case we need to download the source code of the CSS stylesheet first. Since most of the time is going to be used by receiving the source code we need to ensure that our application stays responsive.

Finally we add the new elements (nodes with sub-nodes with possible sub-nodes with possible ...) in chunks of 100 - just to stay a little bit responsive while filling the tree.

var content = String.Empty;

var token = cts.Token;

if (String.IsNullOrEmpty(selected.Href))

content = selected.OwnerNode.TextContent;

else

{

var http = new HttpClient { BaseAddress = local };

var request = await http.GetAsync(selected.Href, cts.Token);

content = await request.Content.ReadAsStringAsync();

token.ThrowIfCancellationRequested();

}

var css = DocumentBuilder.Css(content);

for (int i = 0, j = 0; i < css.CssRules.Length; i++, j++)

{

tree.Add(new CssRuleViewModel(css.CssRules[i]));

if (j == 100)

{

j = 0;

await Task.Delay(1, cts.Token);

}

}

The deciding part is actually to use DocumentBuilder.Css for constructing the CSSStyleSheet object. In the CssRuleViewModel it is just a matter of distinguishing between the various rules to ensure that each one is displayed appropriately. There are three types of rules:

- Rules that contain declarations (use the

Styleproperty). Examples: Keyframe, Style. - Rules that contain other rules (use the

CssRulesproperty). Examples: Keyframes, Media. - Rules that do not contain anything (use special properties) or have special content. Examples: Import, Namespace.

The following code shows the basic differentiation between those types. Note that CSSFontFaceRule belongs to the third category - it has special content in form of a special set of declarations.

public CssRuleViewModel(CSSRule rule)

{

Init(rule);

switch (rule.Type)

{

case CssRule.FontFace:

var font = (CSSFontFaceRule)rule;

name = "@font-face";

Populate(font.CssRules);

break;

case CssRule.Keyframe:

var keyframe = (CSSKeyframeRule)rule;

name = keyframe.KeyText;

Populate(keyframe.Style);

break;

case CssRule.Keyframes:

var keyframes = (CSSKeyframesRule)rule;

name = "@keyframes " + keyframes.Name;

Populate(keyframes.CssRules);

break;

case CssRule.Media:

var media = (CSSMediaRule)rule;

name = "@media " + media.ConditionText;

Populate(media.CssRules);

break;

case CssRule.Page:

var page = (CSSPageRule)rule;

name = "@page " + page.SelectorText;

Populate(page.Style);

break;

case CssRule.Style:

var style = (CSSStyleRule)rule;

name = style.SelectorText;

Populate(style.Style);

break;

case CssRule.Supports:

var support = (CSSSupportsRule)rule;

name = "@supports " + support.ConditionText;

Populate(support.CssRules);

break;

default:

name = rule.CssText;

break;

}

}

Additionally declarations and values have their own constructors as well, even though they are not required to be as selective as the shown constructor.

Update 2

Another interesting (yet obvious) possibility of using AngleSharp is to read out the HTML tree. As noted before, the parser takes HTML5 parsing rules into account, which means that the resulting tree could be non-trivial (due to various exceptions / tolerances that have been applied by the parser).

Nevertheless most pages try to be as valid as possible, which does not require any special parsing rules at all. The following screenshot shows how the tree sample looks like:

The code for this could look less complicated than it does, however, it distinguishes between various types of nodes. This is done to hide new lines, which (as defined) ended up as text nodes in the document tree. Additionally multiple spaces are combined to one space character.

public static TreeNodeViewModel Create(Node node)

{

if (node is TextNode) //Special treatment for text-nodes

return Create((TextNode)node);

else if (node is Comment) //Comments have a special color

return new TreeNodeViewModel { Value = Comment(((Comment)node).Data), Foreground = Brushes.Gray };

else if (node is DocumentType) //Same goes for the doctype

return new TreeNodeViewModel { Value = node.ToHtml(), Foreground = Brushes.DarkGray };

else if(node is Element) //Elements are also treated specially

return Create((Element)node);

//Unknown - we don't care

return null;

}

The Element has to be treated differently, since it might contain children. Hence we require the following code:

static TreeNodeViewModel Create(Element node)

{

var vm = new TreeNodeViewModel { Value = OpenTag(node) };

foreach (var element in SelectFrom(node.ChildNodes))

{

element.parent = vm.children;

vm.children.Add(element);

}

if (vm.children.Count != 0)

vm.expansionElement = new TreeNodeViewModel { Value = CloseTag(node) };

return vm;

}

The circle closes when we look at the SelectFrom method:

public static IEnumerable<TreeNodeViewModel> SelectFrom(IEnumerable<Node> nodes)

{

foreach (var node in nodes)

{

TreeNodeViewModel element = Create(node);

if (element != null)

yield return element;

}

}

Here we just return an iterator that iterates over all nodes and returns the created TreeNodeViewModel instance, if any.

Points of Interest

When I started this project I have already been quite familiar with the official W3C specification and the DOM from a JavaScript programmer's point of view. However, implementing the specification did not only improve my knowledge about web development in general, but also about (possible) performance optimizations and interesting (yet widely unknown) issues.

I think that having a well-maintained DOM implementation in C# is definitely something nice to have for the future. I am currently busy doing other things, but this is a kind of project I will definitely pursue for the next couple of years.

This being said I hope that I could gain a little bit of attention and that some folks would be interested in committing some code to the project. It would be really nice to get a nice and clean (and as perfect as possible) HTML parser implementation in C#.

References

The whole work would not have been possible without outstanding documentation and specification supplied by the W3C. I have to admit that some documents seem to be not very useful or just outdated, while others are perfectly fine and up to date. It's also very important to question some points there, since (mostly only very small) mistakes can be found as well.

This is a list of the my personal most used (W3C and WHATWG) documents:

- HTML 5.1 Specification (Draft)

- CSS Syntax

- CSS Selectors

- DOM Specification

- Definitions of characters

- XML 1.1 Specification (Recommendation)

- XHTML Syntax

- CSS Selectors 4 (Draft)

- CSS 2.1 Properties

- WHATWG HTML Standard

Of course there are several other documents that have been useful (all of them supplied by the W3C or the WHATWG), but they list above is a good starter.

Additionally the following links might be helpful:

History

- v1.0.0 | Initial Release | 19.06.2013

- v1.1.0 | Added stylesheet sample | 22.06.2013

- v1.1.1 | Fixed some typo | 25.06.2013

- v1.2.0 | Added stylesheet sample | 04.07.2013